Intersection of Deep Neural Network Compression and Explainability

Deep neural networks (DNNs) are increasingly employed across many domains, but their high computational and memory demands, together with their “black box” nature, present significant challenges. Pruning, a model compression technique, reduces the computational load and memory footprint of DNNs, while explainability techniques aim to make a model's decision-making more interpretable. Despite the importance of both approaches, little work has explored the relationship between pruning and explainability.

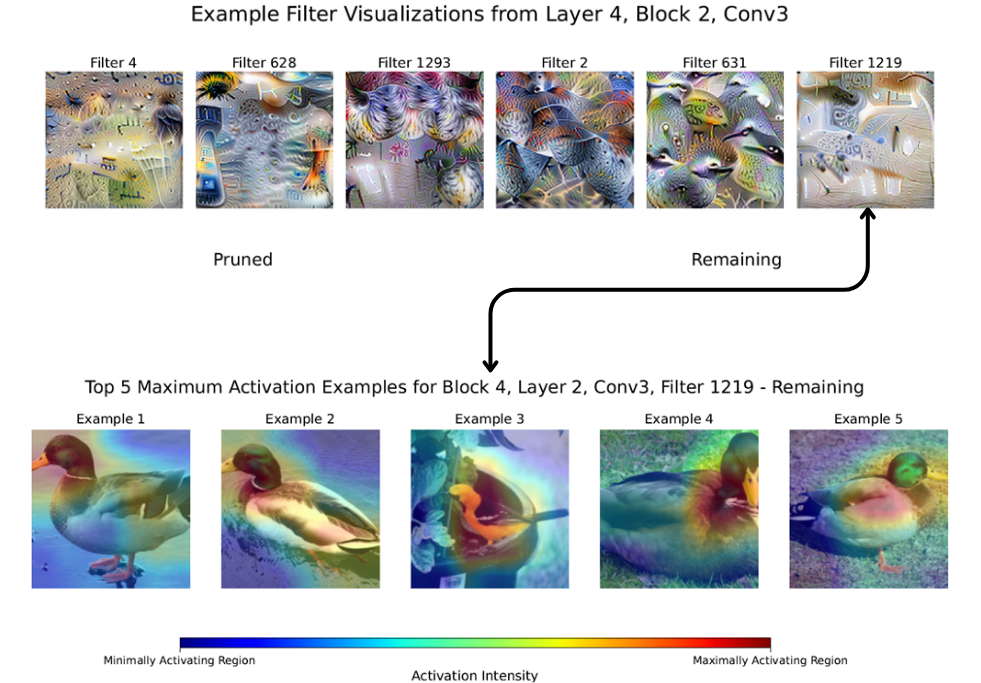

This study investigates this relationship using the ResNet50 model with the CUB Birds dataset. The model was pruned with both unstructured and structured pruning across a range of sparsity levels. A suite of complementary qualitative and quantitative explainability techniques was then applied to explore how pruning affects the model's decision-making.
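A minimal sketch of the two pruning styles, written in NumPy for a single convolution weight tensor shaped (out_channels, in_channels, kh, kw). It assumes magnitude-based criteria: the smallest |w| for unstructured pruning and the smallest L2 filter norm for structured pruning. The study's exact criteria are not stated here, and the function names are hypothetical. In PyTorch, `torch.nn.utils.prune.l1_unstructured` and `torch.nn.utils.prune.ln_structured` produce comparable masks.

```python
import numpy as np


def unstructured_prune(weight: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero the `sparsity` fraction of individual weights with the smallest magnitude."""
    k = int(round(sparsity * weight.size))
    if k == 0:
        return weight.copy()
    flat = np.abs(weight).ravel()
    # argpartition places the k smallest magnitudes in the first k slots (unordered)
    idx = np.argpartition(flat, k - 1)[:k]
    mask = np.ones(weight.size, dtype=bool)
    mask[idx] = False
    return weight * mask.reshape(weight.shape)


def structured_prune(weight: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero whole output filters (dim 0) with the smallest L2 norm."""
    n_filters = weight.shape[0]
    k = int(round(sparsity * n_filters))
    # One L2 norm per output filter
    norms = np.sqrt((weight.reshape(n_filters, -1) ** 2).sum(axis=1))
    pruned = weight.copy()
    pruned[np.argsort(norms)[:k]] = 0.0
    return pruned


def sparsity_of(weight: np.ndarray) -> float:
    """Fraction of entries that are exactly zero."""
    return float(np.mean(weight == 0))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.standard_normal((64, 32, 3, 3))  # random stand-in for a conv layer
    for s in (0.3, 0.5, 0.7, 0.9):
        print(s, sparsity_of(unstructured_prune(w, s)), sparsity_of(structured_prune(w, s)))
```

Unstructured pruning hits the requested sparsity almost exactly, but its irregular zero pattern needs sparse kernels to give real speed-ups. Structured pruning removes whole filters, so the layer can be physically shrunk, at the cost of coarser granularity (here 0.3 × 64 filters rounds down to 19).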

Findings indicate that moderate pruning, at sparsity levels between 0.3 and 0.7, retains accuracy and produces decisions that align with human expectations, suggesting that pruned models maintain a level of “reasonableness” in their predictions. However, a critical pruning threshold was identified, beyond which both accuracy and interpretability decline significantly. Models can therefore be pruned to moderate levels for deployment in resource-constrained environments while maintaining interpretability and reliability.
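One way to make the “critical pruning threshold” concrete, sketched under assumptions: given the accuracy measured at each sparsity level, report the first sparsity at which accuracy falls more than a chosen tolerance below the unpruned baseline. The function name and the 2-percentage-point default tolerance are hypothetical choices, not values from the study.

```python
from typing import Optional, Sequence


def critical_sparsity(
    sparsities: Sequence[float],
    accuracies: Sequence[float],
    baseline: float,
    tolerance: float = 0.02,
) -> Optional[float]:
    """Return the lowest sparsity whose accuracy drops more than `tolerance`
    below `baseline`, or None if every level stays within tolerance."""
    # Walk the sweep in order of increasing sparsity
    for sparsity, accuracy in sorted(zip(sparsities, accuracies)):
        if baseline - accuracy > tolerance:
            return sparsity
    return None
```

Applying the same check to an interpretability metric shows whether accuracy and interpretability break down at the same sparsity.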

Finally, this study implemented a novel pruning technique that uses an explainability-based pruning criterion, leveraging bounding boxes and layer-wise relevance propagation (LRP). Although this approach was unsuccessful, it highlighted key discrepancies between how humans and machines classify images, underscoring the need to understand model decision-making.
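The exact criterion used in the study is not described here, so the following is only a hypothetical sketch of how bounding boxes and LRP could be combined. Each channel of a layer is scored by the fraction of its positive LRP relevance that falls inside the annotated bird bounding box, scores are averaged over images, and the lowest-scoring channels become pruning candidates. It assumes per-channel relevance maps have already been computed by some LRP implementation and that boxes have been rescaled to feature-map coordinates. All function names are hypothetical.

```python
from typing import Sequence, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive, feature-map coords


def box_relevance_scores(relevance: np.ndarray, box: Box) -> np.ndarray:
    """Per-channel fraction of positive relevance inside `box`.

    relevance: array (C, H, W) of LRP relevance for one image (assumed precomputed).
    """
    x0, y0, x1, y1 = box
    pos = np.clip(relevance, 0.0, None)  # ignore negative (contradicting) relevance
    n_channels = pos.shape[0]
    total = pos.reshape(n_channels, -1).sum(axis=1)
    inside = pos[:, y0:y1, x0:x1].reshape(n_channels, -1).sum(axis=1)
    # Channels with no positive relevance at all get a score of 0
    return np.divide(inside, total, out=np.zeros_like(total), where=total > 0)


def dataset_scores(relevances: Sequence[np.ndarray], boxes: Sequence[Box]) -> np.ndarray:
    """Average the per-image channel scores over a set of images."""
    return np.mean([box_relevance_scores(r, b) for r, b in zip(relevances, boxes)], axis=0)


def channels_to_prune(scores: np.ndarray, sparsity: float) -> np.ndarray:
    """Indices (ascending) of the `sparsity` fraction of lowest-scoring channels."""
    k = int(round(sparsity * len(scores)))
    return np.sort(np.argsort(scores)[:k])
```

A criterion like this encodes the human assumption that useful evidence lies on the bird itself. Channels that respond to background context score poorly even when they help classification, which is one way such a criterion can diverge from what the network actually relies on.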

Prize Categories

Best Software Project

Technologies and Skills

- Machine learning

- Deep Neural Networks

- Software Engineering

- Programming

- PyTorch